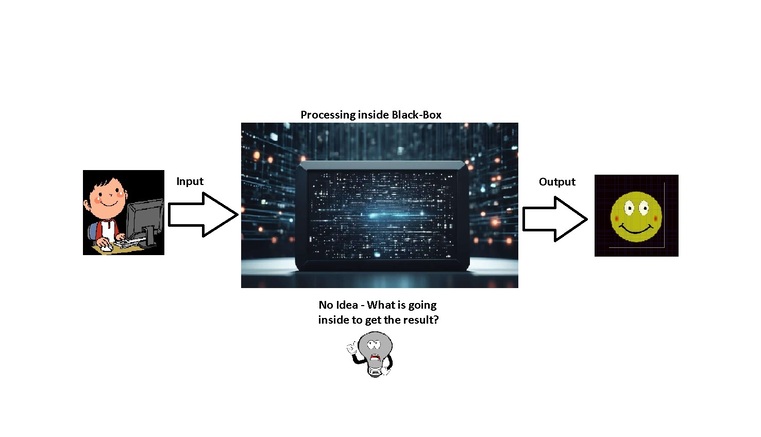

In the modern world, Artificial Intelligence is spreading everywhere. Companies are racing to improve their AI models to solve human problems. A user gives an input to an AI system and receives the processed output from that platform. As consumers of that AI environment, we do not think about the process going on inside the AI. But for a developer or creator of an AI system, there is a challenge, commonly called the Black Box problem.

At present, AI models fall broadly into two categories. In the first, the developer knows how the model solves a problem. In the second, the developer has little idea what is going on inside the system while it processes input data. In the latter scenario, the data is processed inside a black box.

Now, consider a black box AI model. Users can see the inputs, and they can see the outputs. But they do not know exactly how the model arrives at its conclusions, which factors are involved, and so on.
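To make the idea concrete, here is a minimal Python sketch under simplifying assumptions. The "model" is a toy linear function whose weights are tucked inside a closure and not exposed through its interface, so the caller can only send inputs and read outputs. The names `make_black_box` and `sensitivity` are hypothetical, invented for this illustration. By nudging one input at a time and watching how the output moves, we can estimate which inputs matter, which is the basic idea behind perturbation-based explanation methods. Real black box models are vastly larger and nonlinear, so a probe like this gives only a local, approximate picture.

```python
from typing import Callable, List

Model = Callable[[List[float]], float]


def make_black_box() -> Model:
    """Return a toy model whose weights are not exposed through its interface (hypothetical)."""
    weights = [0.8, -0.1, 0.0]  # internal detail the caller never sees directly

    def predict(x: List[float]) -> float:
        return sum(w * xi for w, xi in zip(weights, x))

    return predict


def sensitivity(model: Model, x: List[float], eps: float = 1e-3) -> List[float]:
    """Estimate each input's influence on the output using only input/output queries."""
    base = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] += eps  # nudge a single input feature
        scores.append((model(perturbed) - base) / eps)  # finite-difference slope
    return scores


if __name__ == "__main__":
    model = make_black_box()
    print(sensitivity(model, [1.0, 2.0, 3.0]))  # roughly [0.8, -0.1, 0.0]
```

Because the toy model is linear, the estimated slopes recover its hidden weights almost exactly; for a deep network they would change from one input to the next.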

At present, many of the most prominent Artificial Intelligence models, such as OpenAI’s ChatGPT and Meta’s Llama, are black box AI. These models are trained on massive data sets using complex deep learning processes, and even their own creators do not fully understand how they function.

These black box models certainly deliver impressive results, but their lack of transparency can make it hard to trust their outputs. This opacity can hide biased decision-making, privacy issues, security problems, and other harmful consequences.

Existence of Black Box AI systems:

These models come into existence for one of two reasons: either their creators make them opaque intentionally, or the opacity emerges as a by-product of training. In the first case, developers know how the model works but do not reveal it to the public, in order to protect their intellectual property; many conventional AI models are black boxes for this reason. Most of today's black box models, however, are based on deep learning.

Deep learning is a type of machine learning that uses multilayered neural networks. A traditional, shallow neural network has only one or two hidden layers, whereas a deep learning model stacks many layers, often dozens or even hundreds. Every layer contains multiple artificial neurons. Each neuron is a small mathematical function, loosely inspired by neurons in the human brain, that computes a weighted sum of its inputs and passes the result through a nonlinear activation function.
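The layered structure described above can be sketched in a few lines of plain Python. This is a toy illustration, not how production deep learning frameworks are implemented: each neuron computes a weighted sum of its inputs plus a bias and applies a ReLU activation (one common choice, assumed here), a layer is a list of neurons reading the same inputs, and the network is a list of layers applied in order. The weights in the example are hand-picked placeholders; in a real model they are learned from data and number in the millions or billions, which is exactly why the result is hard to interpret.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Network = List[Tuple[Matrix, Vector]]  # (weights, biases) for each layer


def neuron(inputs: Vector, weights: Vector, bias: float) -> float:
    """One artificial neuron: weighted sum of inputs plus bias, then ReLU."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, z)


def layer(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weights, biases)]


def forward(x: Vector, network: Network) -> Vector:
    """Feed the input through each layer in turn; a deeper network just has more entries."""
    for weights, biases in network:
        x = layer(x, weights, biases)
    return x


if __name__ == "__main__":
    # Hypothetical two-layer network: 2 inputs -> 2 hidden neurons -> 1 output.
    net: Network = [
        ([[0.5, -0.25], [0.25, 0.5]], [0.0, 0.5]),
        ([[1.0, 1.0]], [0.0]),
    ]
    print(forward([2.0, 4.0], net))
```

Even in this tiny network, explaining the output means tracing every weight through every layer; with many layers and learned weights, that tracing quickly becomes impractical.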

These deep neural networks can accept large amounts of unstructured, raw data and identify patterns in it to generate outputs. This is the grey area of Artificial Intelligence, where an AI appears to reason and create original content, tasks once thought possible only for human beings.

Issues with Black Box AI systems:

Black box AI models can process vast amounts of data quickly, but they face several challenges on the road to adoption. Some of them are discussed below:

Trust in the Output:

A user of a black box model does not know how the model arrived at its output. Even when the output is accurate and desirable, it cannot easily be validated without knowing the internal process.

Tuning Model Operation:

When these models produce incorrect or inaccurate outputs, it can be hard to adjust the model or fix the issue without knowing its internal process. In this situation, a user cannot pinpoint the problematic area.

For example, autonomous vehicles are trained on sophisticated data sets to make real-time decisions. But in real-world scenarios, these vehicles have to make complex decisions to operate safely, and a single wrong decision can cause a fatal accident. When the vehicle relies on a black box AI model, it is hard to find the cause of an incorrect decision.

Ethical Concerns:

An Artificial Intelligence model can make biased decisions if biases are present in the data sets it was trained on. With black box models, it can be very hard to detect or pinpoint the presence of such bias.
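Even when a model's internals are opaque, its outputs can still be audited for some kinds of bias. Here is a minimal sketch, assuming the model makes binary decisions (1 = approved, 0 = rejected) and each case is labeled with a group. It compares the rate of positive outcomes per group, a check commonly called demographic parity. The function names and the sample data are hypothetical. A large gap is a signal worth investigating rather than proof of bias, and it says nothing about why the model behaves that way.

```python
from collections import defaultdict
from typing import Dict, List


def positive_rate_by_group(predictions: List[int], groups: List[str]) -> Dict[str, float]:
    """Fraction of positive (1) predictions for each group label."""
    counts: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        counts[group] += 1
        positives[group] += pred
    return {g: positives[g] / counts[g] for g in counts}


def demographic_parity_gap(predictions: List[int], groups: List[str]) -> float:
    """Difference between the highest and lowest per-group positive rate."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical outputs from a black box model for eight applicants.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(positive_rate_by_group(preds, groups))  # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_gap(preds, groups))  # 0.5
```

Note that this audit only looks at inputs and outputs, so it works on a black box; finding the cause of a gap still requires insight into the model or its training data.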

Regulation of AI:

Black box AI models are very hard to regulate, because it can be difficult for an organization or institution to know whether a model processes input data in compliance with the relevant rules. This is a critical and debated topic whenever AI is deployed to the public or to end users.

White Box AI:

In contrast to black box AI, a white box AI, or XAI (explainable AI), has transparent inner processing. Here, users can understand how the Artificial Intelligence processes their input data and reaches its output.
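For contrast, here is a minimal sketch of a white box model, assuming a simple linear scoring function. The feature names, weights, and bias are made up for illustration. Because the score is just a bias plus a weighted sum, every prediction can be broken down into exactly how much each input contributed, so the explanation comes directly from the model rather than from probing it from outside.

```python
from typing import Dict, Tuple

# Hypothetical white box model: every weight is visible and has a clear meaning.
WEIGHTS: Dict[str, float] = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = 1.0


def predict_with_explanation(features: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the score together with each feature's exact contribution to it."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return score, contributions


if __name__ == "__main__":
    score, contributions = predict_with_explanation(
        {"income": 4.0, "debt": 2.0, "years_employed": 4.0}
    )
    # List contributions from most to least influential.
    for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"{name}: {value:+.2f}")
    print(f"score: {score:.2f}")
```

Here the explanation is exact: income raised the score by 2.00, debt lowered it by 1.50, and years of employment added 1.00 on top of the bias.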

White box AI is much easier to trust and validate; however, it is very hard to build an AI system whose processing is fully transparent.

Thanks for reading. See you soon with another exploration!